The AI Power Dilemma: A Cost Analysis of Three Grid-Level Survival Strategies

Surging AI power demand is making legacy data centers obsolete. This analysis breaks down the true 5-year TCO of the three primary survival strategies: retrofitting, on-site power generation, and building new in power-rich regions. Make your next investment with confidence.

A deep-dive financial analysis of the true cost of retrofitting, generating on-site power, or building new to survive the AI power crunch. This is the data you won't find in a press release.

Executive Summary

- The rapid integration of high-density AI workloads is pushing legacy data center power and cooling infrastructure to the breaking point, creating an existential threat to profitability and future growth.

- A direct financial comparison reveals that simply retrofitting existing facilities, while seemingly fast, carries a significant 5-year Total Cost of Ownership (TCO) due to complex engineering and inefficient power usage.

- Next-generation on-site power, specifically Small Modular Reactors (SMRs), presents the highest capital cost but offers long-term opex stability and grid independence, making it a viable, albeit costly, hedge.

- A strategic "Geographic Pivot" to new builds in power-rich, low-cost regions offers the most financially advantageous TCO, but introduces challenges related to latency, connectivity, and speed-to-market.

- The optimal strategy is not universal; it is a critical decision dependent on an organization's specific capital structure, risk tolerance, and long-term strategic goals.

Introduction: The AI Tsunami is Here

The digital infrastructure landscape is in the midst of its most profound shift in a generation. The promise of artificial intelligence, once a future concept, is now a present-day reality consuming power at a rate that is rendering a decade of data center design principles obsolete. Projections as of mid-2025 indicate that AI-specific workloads could account for up to 20% of all power consumed by U.S. data centers by 2030, a staggering increase from less than 5% just a few years ago.

This isn't simply a matter of needing more power; it's a crisis of density. Racks designed for 10-15 kW are now being asked to support 50-100 kW of power-hungry GPUs, creating thermal loads that conventional air cooling cannot handle. For operators and investors, this raises a multi-trillion-dollar question: how do you adapt to survive the AI tsunami?
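To make the density shift concrete, this short Python sketch counts how many racks one megawatt of IT load fills at legacy versus AI densities. It uses the midpoints of the ranges quoted above (12.5 kW and 75 kW), which are our choice for illustration. It assumes that essentially all rack power ends up as heat and applies the standard conversion of about 3,412 BTU/hr per kW. The function names are illustrative and do not come from any tool.

```python
import math

KW_PER_MW = 1000
BTU_PER_HR_PER_KW = 3412.14  # standard conversion: 1 kW of heat is about 3,412 BTU/hr


def racks_per_mw(rack_kw: float) -> int:
    """Whole racks that 1 MW of IT load can fill at a given per-rack draw."""
    return math.floor(KW_PER_MW / rack_kw)


def rack_heat_btu_hr(rack_kw: float) -> float:
    """Heat each rack must shed, assuming essentially all IT power becomes heat."""
    return rack_kw * BTU_PER_HR_PER_KW


# Illustrative midpoints of the article's ranges (assumption, not measured data)
for label, kw in [("legacy, 10-15 kW", 12.5), ("AI, 50-100 kW", 75.0)]:
    print(f"{label}: {racks_per_mw(kw)} racks per MW, "
          f"{rack_heat_btu_hr(kw):,.0f} BTU/hr per rack")
```

The same megawatt shrinks from 80 racks to 13. Each rack must therefore reject six times as much heat, and that is the density problem in a nutshell.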

The Core Analysis: Three Survival Strategies

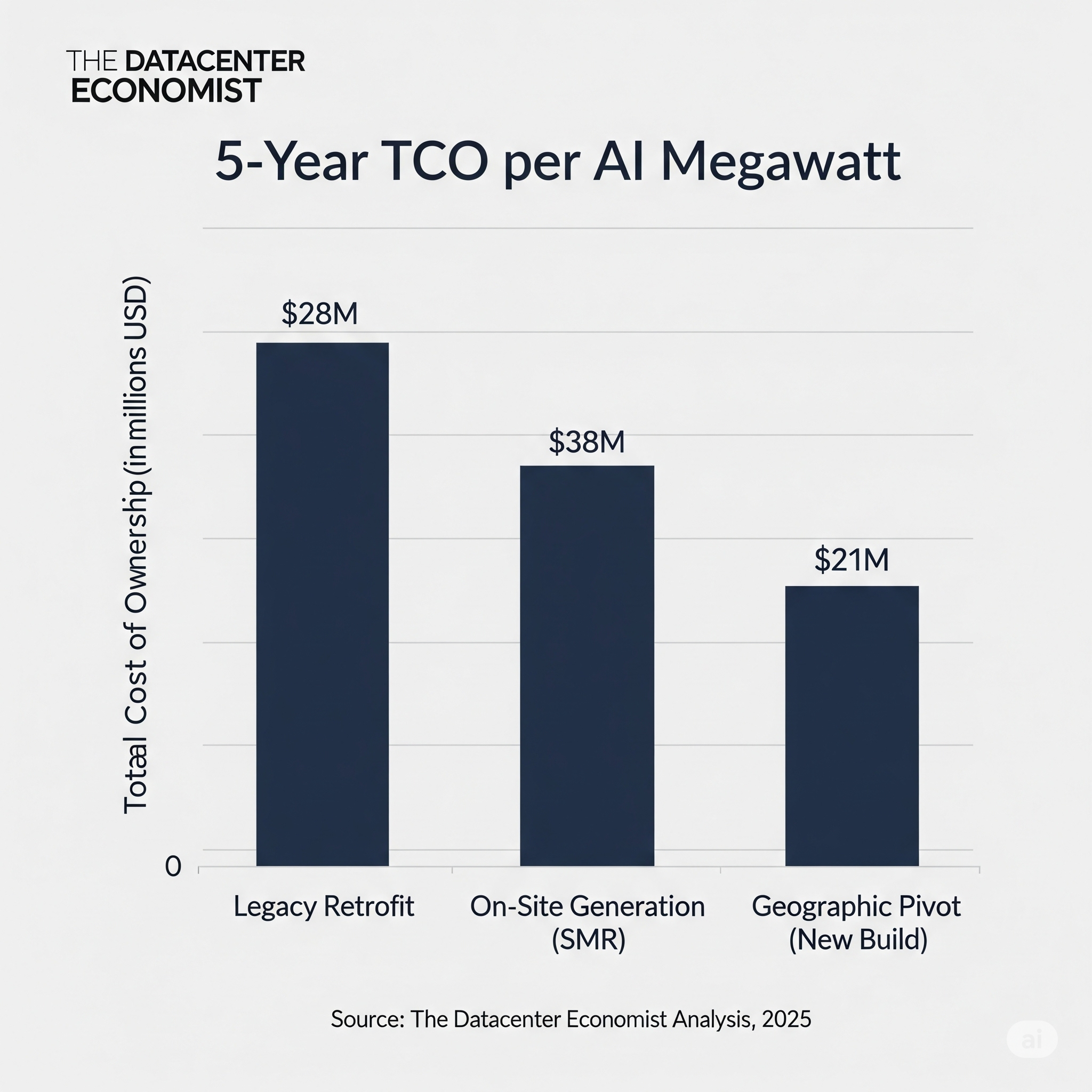

We have analyzed the 5-year Total Cost of Ownership (TCO) on a per-megawatt basis for three distinct strategic responses to the AI power dilemma.
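The analysis does not publish its underlying model. What follows is only a minimal Python sketch of how a 5-year TCO per megawatt can be assembled. It assumes that TCO equals upfront capital plus five years of energy and other operating costs, optionally discounted. The `Scenario` and `tco_per_mw` names, the use of PUE to gross up IT load, and every input value are hypothetical. They are not the figures behind the chart.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Scenario:
    """Hypothetical inputs for one strategy, all expressed per MW of IT capacity."""
    capex_per_mw: float               # upfront $ per MW
    power_price_per_mwh: float        # $ per MWh delivered to the facility
    pue: float = 1.0                  # facility energy / IT energy
    utilization: float = 1.0          # average fraction of IT capacity actually drawn
    other_opex_per_year: float = 0.0  # staff, maintenance, etc., $ per MW-year


def tco_per_mw(s: Scenario, years: int = 5, discount_rate: float = 0.0) -> float:
    """Capex plus (optionally discounted) annual opex over the horizon."""
    facility_mwh = HOURS_PER_YEAR * s.utilization * s.pue
    annual_opex = facility_mwh * s.power_price_per_mwh + s.other_opex_per_year
    opex = sum(annual_opex / (1 + discount_rate) ** t for t in range(1, years + 1))
    return s.capex_per_mw + opex


# Placeholder inputs only (HYPOTHETICAL); swap in your own quotes and tariffs
example = Scenario(capex_per_mw=13.5e6, power_price_per_mwh=90.0, pue=1.3,
                   utilization=0.8, other_opex_per_year=300_000)
print(f"5-year TCO: ${tco_per_mw(example, discount_rate=0.08) / 1e6:.1f}M per MW")
```

With `discount_rate` left at zero, the function returns a simple undiscounted sum. Setting it above zero weights later-year costs less, which tends to favor options that are light on capex and heavy on opex.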

Strategy A: The High-Density Retrofit

The most immediate option is to upgrade existing facilities. This involves significant and complex engineering: replacing legacy power distribution units (PDUs) and busways, upgrading switchgear, and, most critically, deploying advanced liquid cooling solutions such as rear-door heat exchangers or direct-to-chip systems. While this avoids the time cost of new construction, the capital expenditure is immense. Our analysis indicates an all-in cost of $12-15 million per megawatt to achieve a stable, AI-ready state in a legacy facility. Add five years of continued reliance on often-volatile grid power, and the TCO rises substantially.
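This Python sketch illustrates why grid exposure matters for a retrofit. It takes the article's $12-15 million per megawatt capex range and adds five years of grid energy under three grid-price scenarios. The PUE of 1.3, the 80% utilization, and the $/MWh prices are hypothetical placeholders and do not come from this analysis.

```python
from itertools import product

HOURS_PER_YEAR = 8760
YEARS = 5


def retrofit_tco(capex_per_mw: float, grid_price_per_mwh: float,
                 pue: float = 1.3, utilization: float = 0.8) -> float:
    """Capex plus undiscounted 5-year grid energy cost, per MW of IT load.

    The pue and utilization defaults are HYPOTHETICAL placeholders."""
    energy_cost = HOURS_PER_YEAR * utilization * pue * grid_price_per_mwh * YEARS
    return capex_per_mw + energy_cost


capex_range = (12e6, 15e6)          # the article's all-in retrofit estimate
grid_prices = (60.0, 90.0, 120.0)   # HYPOTHETICAL $/MWh scenarios for a volatile grid

for capex, price in product(capex_range, grid_prices):
    tco = retrofit_tco(capex, price)
    print(f"capex ${capex / 1e6:.0f}M, grid ${price:.0f}/MWh -> "
          f"5-year TCO ${tco / 1e6:.1f}M per MW")
```

A given change in grid price moves the 5-year figure by the same energy increment whatever the capex. The spread across price scenarios therefore shows how much of a retrofit's TCO is exposed to grid volatility.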

Strategy B: The On-Site Power Generation Play

A forward-looking strategy is to achieve grid independence by co-locating data centers with dedicated power generation. Renewables are vital, but intermittent sources such as solar and wind cannot, on their own, supply the 24/7 baseload capacity that AI workloads require. The emerging leader in the carbon-free space is the Small Modular Reactor (SMR). Although SMRs are still navigating regulatory hurdles, their projected levelized cost of energy (LCOE) promises long-term stability and price predictability.

However, many SMR designs use modules rated under 100 MW. Powering a future 1-gigawatt hyperscale campus would therefore require a dozen or more units, which makes it a massive, multi-decade undertaking. This scalability challenge is why some of the largest operators are simultaneously evaluating a more traditional technology for on-site baseload power: the combined-cycle natural gas turbine.
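The scaling arithmetic is simple enough to check directly. This Python sketch computes how many modules a campus needs for a given module rating, with optional spare units for redundancy. The 50, 80, and 95 MW module sizes and the single spare are hypothetical values chosen to sit under the 100 MW mark. They are not the ratings of specific reactor designs.

```python
import math


def modules_needed(campus_mw: float, module_mw: float, spare_modules: int = 0) -> int:
    """Modules required to carry the full campus load, plus optional N+k spares."""
    if module_mw <= 0:
        raise ValueError("module_mw must be positive")
    return math.ceil(campus_mw / module_mw) + spare_modules


CAMPUS_MW = 1000  # the 1-gigawatt hyperscale campus from the text

for module_mw in (50, 80, 95):  # HYPOTHETICAL module ratings under 100 MW
    print(f"{module_mw} MW modules: {modules_needed(CAMPUS_MW, module_mw)} units, "
          f"{modules_needed(CAMPUS_MW, module_mw, spare_modules=1)} with one spare")
```

At 95 MW per module, the campus needs eleven units. Adding one spare for maintenance brings the count to twelve, in line with the "dozen or more" estimate.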

Natural gas offers a proven, reliable, and highly scalable power source capable of meeting gigawatt-level demand today. The trade-off, however, is critical: it comes with significant carbon emissions and a reliance on a volatile fossil fuel market. For companies with aggressive ESG (Environmental, Social, and Governance) commitments, building new fossil fuel infrastructure presents a substantial long-term risk to both regulatory compliance and brand reputation.

Therefore, the "On-Site Generation" strategy represents a fundamental choice between two cost profiles: the immense upfront capital expenditure of nuclear for a carbon-free future versus the faster, more scalable, but emissions-heavy approach of natural gas. The high TCO reflected in our chart is driven by the nuclear option. A natural gas plant would have a different profile, with lower capex but higher, more volatile fuel-driven opex and carbon-related financial risks.
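The following Python example sketches how the two cost profiles differ, per MW of generating capacity. It treats nuclear as capex-heavy with a flat per-MWh fuel and O&M cost. It treats gas as lighter on capex but exposed to fuel and carbon prices. Several inputs are hypothetical placeholders: the combined-cycle heat rate of 6.5 MMBtu/MWh, the 90% capacity factor, and all prices. The one standard published value is the combustion emission factor of about 53 kg CO2 per MMBtu of natural gas (U.S. EPA).

```python
HOURS_PER_YEAR = 8760
CO2_T_PER_MMBTU = 0.05306  # natural gas combustion, ~53.06 kg CO2 per MMBtu (EPA factor)


def generated_mwh(capacity_factor: float, years: int) -> float:
    """Energy produced per MW of generating capacity over the horizon."""
    return HOURS_PER_YEAR * capacity_factor * years


def gas_cost(capex_per_mw: float, gas_price_per_mmbtu: float, carbon_price_per_t: float,
             heat_rate_mmbtu_per_mwh: float = 6.5, capacity_factor: float = 0.9,
             years: int = 5) -> float:
    """Capex plus fuel plus carbon cost; heat rate and capacity factor are HYPOTHETICAL."""
    fuel_mmbtu = generated_mwh(capacity_factor, years) * heat_rate_mmbtu_per_mwh
    return (capex_per_mw
            + fuel_mmbtu * gas_price_per_mmbtu
            + fuel_mmbtu * CO2_T_PER_MMBTU * carbon_price_per_t)


def nuclear_cost(capex_per_mw: float, fuel_and_om_per_mwh: float,
                 capacity_factor: float = 0.9, years: int = 5) -> float:
    """Capex plus a flat per-MWh fuel and O&M charge (no fuel-price volatility modeled)."""
    return capex_per_mw + generated_mwh(capacity_factor, years) * fuel_and_om_per_mwh


# HYPOTHETICAL inputs, chosen only to show the shape of each profile
print(f"nuclear: ${nuclear_cost(10e6, 30.0) / 1e6:.1f}M per MW")
for gas_price, carbon_price in [(3.0, 0.0), (6.0, 0.0), (6.0, 100.0)]:
    cost = gas_cost(1.5e6, gas_price, carbon_price)
    print(f"gas @ ${gas_price}/MMBtu, carbon ${carbon_price}/t: ${cost / 1e6:.1f}M per MW")
```

Raising the gas or carbon price moves only the gas figure, which is the volatility the text describes. The nuclear figure changes only with its capex and its flat fuel assumption.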

Strategy C: The Geographic Pivot

The most financially compelling strategy on paper is to bypass legacy constraints entirely. Building new, AI-native data centers in emerging regions with abundant, low-cost power and favorable climates presents a clear TCO advantage. Markets in the U.S. Midwest, parts of Canada, and Scandinavia offer power rates that are often 30-50% lower than in constrained hubs like Northern Virginia or Silicon Valley. While the initial build cost is high, the long-term operational savings on power, the single largest opex component, are substantial. This strategy's primary challenges are network latency for end users and the long timelines associated with greenfield construction.
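This Python sketch shows why the power-rate gap dominates the long-run math. It converts the 30-50% rate discount cited above into annual and 5-year savings per megawatt of IT load. The $100/MWh hub price, the PUE of 1.2, and the 80% utilization are hypothetical placeholders. Substitute actual tariffs for the markets under consideration.

```python
HOURS_PER_YEAR = 8760


def annual_power_savings(hub_price_per_mwh: float, rate_discount: float,
                         pue: float = 1.2, utilization: float = 0.8) -> float:
    """Yearly $ saved per MW of IT load by paying (1 - rate_discount) x the hub price.

    The pue and utilization defaults are HYPOTHETICAL placeholders."""
    facility_mwh = HOURS_PER_YEAR * utilization * pue
    return facility_mwh * hub_price_per_mwh * rate_discount


HUB_PRICE = 100.0  # HYPOTHETICAL $/MWh in a constrained hub
for discount in (0.30, 0.50):  # the 30-50% range cited in the text
    yearly = annual_power_savings(HUB_PRICE, discount)
    print(f"{discount:.0%} cheaper power: ${yearly:,.0f}/yr, "
          f"${5 * yearly / 1e6:.2f}M over 5 years")
```

Savings scale linearly with the hub price, the discount, and the facility's energy draw. A denser, more heavily utilized AI campus therefore gains proportionally more from the move.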

5-Year TCO per AI Megawatt

This chart crystallizes the financial trade-offs. The long-term operational cost of power makes the Geographic Pivot the most compelling option from a pure TCO perspective, despite its higher initial capital outlay compared to a retrofit.
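The trade-off in the chart, higher upfront spend recovered through cheaper power, reduces to a break-even question. This Python sketch finds the first year in which the cumulative cost of a new build, with higher capex and lower opex, falls to or below that of a retrofit. It assumes flat, undiscounted annual opex. The $13.5 million retrofit capex is the midpoint of the article's $12-15 million range. The new-build capex and both opex figures are hypothetical.

```python
from typing import Optional


def first_year_cheaper(capex_a: float, opex_a: float,
                       capex_b: float, opex_b: float,
                       horizon: int = 5) -> Optional[int]:
    """First whole year (0 = day one) in which option B's cumulative cost is at or
    below option A's, using flat undiscounted annual opex. None if not within horizon."""
    for year in range(horizon + 1):
        cost_a = capex_a + opex_a * year
        cost_b = capex_b + opex_b * year
        if cost_b <= cost_a:
            return year
    return None


# Option A: retrofit; option B: new build in a power-rich region
# (new-build capex and both opex values are HYPOTHETICAL)
year = first_year_cheaper(capex_a=13.5e6, opex_a=1.2e6, capex_b=15.0e6, opex_b=0.7e6)
print("new build cheaper by year:", year)
```

The break-even year may land beyond the 5-year window. In that case the retrofit wins on this metric, even though the new build could win over a longer horizon. This is why the planning horizon matters as much as the rates themselves.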

Conclusion: No Single Silver Bullet

While a Geographic Pivot presents the most attractive long-term financial case, it is not a universal solution. For enterprises with existing, strategically located facilities and a lower tolerance for latency, a targeted, high-density retrofit may be the only viable path. For hyperscalers with immense capital and multi-decade planning horizons, the stability of on-site generation could be the ultimate competitive moat.

Understanding the intricate financial trade-offs between these strategies is the first step in navigating the new, power-constrained world of AI infrastructure.